Next Gen Search, Part 2: Pushing the limits

In the last part, we looked at how I built a new backend for storing inverted indexes for Pepperminty Wiki, which allows for partial index deserialisation and other nice features that boost performance considerably.

Since the last post, I've completed work on the new search system - though there are a few bits around the edges that I still want to touch up and do some more work on.

In this post though, I want to talk about how I generated test data to give my full-text search engine something to chew on. I've done this before for my markov chain program I wrote a while back, and that was so much fun I did it again for my search engine here.

After scratching my head for a bit to think of a data source I could use, I came up with the perfect plan. Ages ago I downloaded a Wikipedia dump - just the content pages in Wikitext markup. Why not use that?

As it turns out, it was a rather good idea. Some processing of said dump was required though to transform it into a format that Pepperminty Wiki can understand, though. Pepperminty Wiki stores pages on disk as flat text files in markdown, and indexes them in pageindex.json. If pageindex.json doesn't exist, then Pepperminty Wiki rebuilds it automagically by looking for content pages on disk.

This makes it easy to import batches of new pages into Pepperminty Wiki, so all we need to do is extract the wiki text, convert to markdown, and import! This ended up requiring a number of different separate steps though, so let's take it 1 at a time

First, we need a Wikipedia database dump in the XML format. These are available from dumps.wikimedia.org. There are many different ones available, but I suggest grabbing one that has a filename similar to enwiki-20180201-pages-articles.xml - i.e. just content pages - no revision history, user pages, or additional extras. I think the most recent one as of the time of posting is downloadable here - though I'd warn you that it's 15.3GiB in size! You can see a list of available dump dates for the English Wikipedia here.

Now that we've got our dump, let's extract the pages from it. This is nice and easy to do with wikiextractor on GitHub:

nice -n20 wikiextractor enwiki-20180201-pages-articles.xml --no_templates --html --keep_tables --lists --links --sections --json --output wikipages --compress --bytes 25M >progress.log 2>&1

This will parse the dump and output a number of compressed files to the wikipages directory. These will have 1 JSON object per line, each containing information about a single page on Wikipedia - with page content pre-converted to HTML for us. The next step is to extract the page content and save it to a file with the correct name. This ended up being somewhat complicated, so I wrote a quick Node.js script to do the job:

#!/usr/bin/env node

const readline = require("readline");

const fs = require("fs");

if(!fs.existsSync("pages"))

fs.mkdirSync("pages", { mode: 0o755 });

// From https://stackoverflow.com/a/44195856/1460422

function html_unentities(encodedString) {

var translate_re = /&(nbsp|amp|quot|lt|gt);/g;

var translate = {

"nbsp":" ",

"amp" : "&",

"quot": "\"",

"lt" : "<",

"gt" : ">"

};

return encodedString.replace(translate_re, function(match, entity) {

return translate[entity];

}).replace(/&#(\d+);/gi, function(match, numStr) {

var num = parseInt(numStr, 10);

return String.fromCharCode(num);

});

}

const interface = readline.createInterface({

input: process.stdin,

//output: process.stdout

});

interface.on("line", (text) => {

const obj = JSON.parse(text);

fs.writeFileSync(`pages/${obj.title.replace(/\//, "-")}.html`, html_unentities(obj.text));

console.log(`${obj.id}\t${obj.title}`);

});

This basically takes the stream of JSON object on the standard input, parses them, and saves the relevant content to disk. We can invoke it like so:

bzcat path/to/*.bz2 | ./parse.js

Don't forget to chmod +x parse.js if you get an error here. The other important thing about the above script ist hat we have to unescape the HTML entities (e.g. >), because otherwise we'll have issues later with HTML conversation and page names will look odd. This is done by the html_unentities() function in the above script.

This should result in a directory containing a large number of files - 1 file per content page. This is much better, but we're still not quite there yet. Wikipedia uses wiki markup (which we converted to HTML with wikiextractor) and Pepperminty Wiki uses Markdown - the 2 of which are, despite all their similarities, inherently incompatible. Thankfully, pandoc is capable of converting from HTML to markdown.

Pandoc is great at this kind of thing - it uses an intermediate representation and allows you to convert almost any type of textual document format to any other format. Markdown to PDF, EPUB to plain text, ..... and HTML to markdown (just to name a few). It actually looks like it shares a number of features with traditional compilers like GCC.

Anyway, let's use it to convert our folder full of wikitext files to a folder full of markdown:

mkdir -p pages_md;

find pages/ -type f -name "*.html" -print0 | nice -n20 xargs -P4 -0 -n1 -I{} sh -c 'filename="{}"; title="${filename##*/}"; title="${title%.*}"; pandoc --from "html" --to "markdown+backtick_code_blocks+pipe_tables+strikeout" "${filename}" -o "pages_md/${title}.md"; echo "${title}";';

_(See this on explainshell.com - doesn't include the nice -n20 due to a bug on their end)_

This looks complicated, but it really isn't. Let's break it down a bit:

find pages/ -type f -name "*.html" -print0

This finds all the HTML files that we want to convert to Markdown, and delimits the output with a NUL byte - i.e. 0x0`. This makes the next step easier:

... | nice -n20 xargs -P4 -0 -n1 -I{} sh -c '....'

This pushes a new xargs instance into the background, which will execute 4 commands at a time. xargs executes a command for each line of input it receives. In our case, we're delimiting with NUL 0x0 instead though. We explicitly specify that we can 1 command per line of input though, as xargs tries to optimise and do command file1 file2 file3 instead.

The sh -c bit is starting a subshell, in which we execute a small wrapper script that then calls pandoc. This is of course inefficient, but I couldn't find any way around spawning a subshell in this instance.

filename="{}";

title="${filename##*/}";

title="${title%.*}";

pandoc --from "html" --to "markdown+backtick_code_blocks+pipe_tables+strikeout" "${filename}" -o "pages_md/${title}.md";

echo "${title}";

I've broken the sh -c subshell script down into multiple liens for readability. Simply put, it extracts the page title from the filename, converts the HTML to Markdown, and saves it to a new file in a different directory with the .md replacing the original .html extension.

When you put all these components together, you get a script that converts a folder full of HTML files to Markdown. Just like with the markov chains extraction I mentioned at the beginning of this post, Bash and shell scripting really is all about lego bricks. This is due in part to the Unix philosophy:

Make each program do one thing well.

There is more to it, but this is the most important point to remember. Many of the core utilities you'll find on the terminal follow this way of thinking.

There's 1 last thing we need to take care of before we have them in the right format though - we need to convert the [display text](page name) markdown-format links back into the Wikipedia [[internal link]] format that Pepperminty Wiki also uses.

Thankfully, another command-line tool I know of called repren is well-suited to this:

repren --from '\[([^\]]+)\]\(([^):]+)\)' --to '[[\1]]' pages_md/*.md

It took some fiddling, but I got all the escaping figured out and the above converts back into the [[internal link]] format well enough.

Now that we've got our folder full of markdown files, we need to extract a random portion of them to act as a test for Pepperminty Wiki - as the whole lot might be a bit much for it to handle (though if Pepperminty Wiki was capable of handling it all eventually that'd be awesome :D). Let's try 500 pages to start:

find path/to/wikipages/ -type f -name "*.md" -print0 | shuf --zero-terminated | head -n500 --zero-terminated | xargs -0 -n1 -I{} cp "{}" .

(See this on explainshell.com)

This is another lego-brick style command. Let's break it down too:

find path/to/wikipages/ -type f -name "*.md" -print0

This lists all the .md files in a directory, delimiting them with a NUL character, as before. It's better to do this than use ls, as find is explicitly designed to be machine-readable.

.... | shuf --zero-terminated

The shuf command randomly shuffles the input lines. In this case, we're telling it that the input is delimited by the NUL byte.

.... | head -n500 --zero-terminated

Similar deal here. head takes the top N lines of input, and discards the rest.

.... | xargs -0 -n1 -I{} cp "{}" .

Finally, xargs calls cp to copy the selected files to the current directory - which is, in this case, the root directory of my test Pepperminty Wiki instance.

Since I'm curious, let's now find out roughly how many words we're dealing with here:

cat data_test/*.md | wc --words

1593190

1.5 million words! That's a lot. I wonder how quickly we can search that?

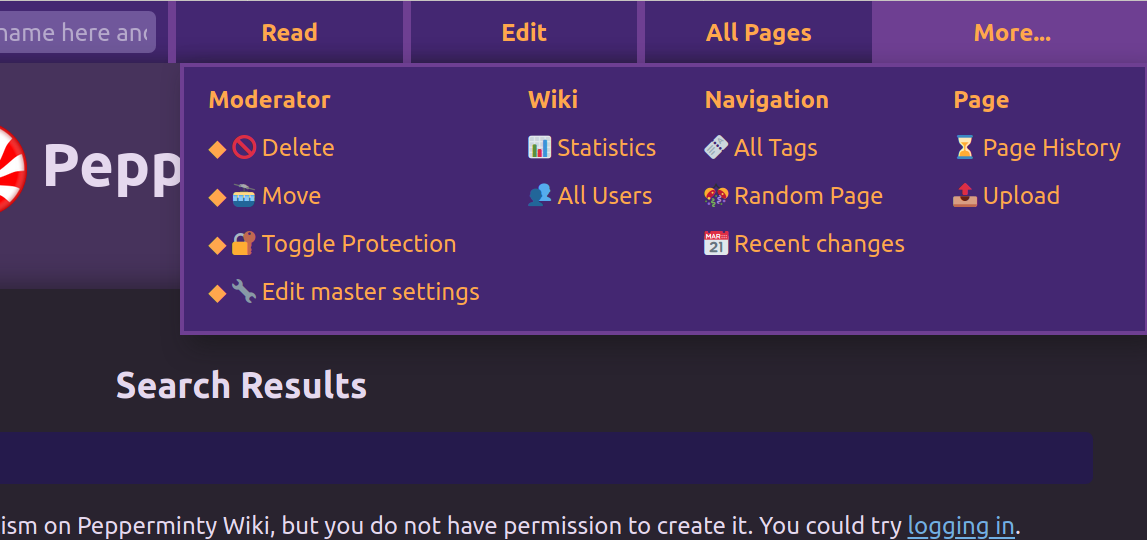

24.8ms? Awesome! That's so much better than before. If you're wondering about new coat of paint in the screenshot - Pepperminty Wiki is getting dark theme, thanks to prefers-color-scheme :D

I wonder what happens if we push it to 2K pages?

This time we get ~120ms for 5.9M total words - wow! I wasn't expecting it to perform so well. At this scale, rebuilding the entire index is particularly costly - so if I was to push it even further it would make sense to implement an incremental approach that spreads the work over multiple requests, assuming I can't squeeze any more performance out the system as-is.

The last thing I want to do here is make a rough estimate about time time complexity the search system as-is, given the data we have so far. This isn't particularly difficult to do.

Given the results above, we can calculate that at 1.5M total words, an increase of ~60K total words results in an increase of 1ms of execution time. At 5.9M words, it's only ~49K words / ms of execution time - a drop of ~11K words / ms of execution time.

From this, we can speculate that for every million words total added to a wiki, we can expect a ~2.5K words / ms of execution time drop - not bad! We'd need more data points to make any reasonable guess as to the Big-O complexity function that it conforms to. My guess would be something like $O(xN^2)$, where x is a constant between ~0.2 and 2.

Maybe at some point I'll go to the trouble of running enough tests to calculate it, but with all the variables that affect the execution time (number of pages, distribution of words across pages, etc.), I'm not in any hurry to calculate it. If you'd like to do so, go ahead and comment below!

Next time, I'll unveil the inner working of the STAS: my new search-term analysis system.

Found this interesting? Got your own story about some cool code you've written to tell? Comment below!

Mailing List

Mailing List

Twitter

Twitter

Reddit

Reddit

Facebook

Facebook